Learning Hierarchical Relational Representations through Relational Convolutions

Awni Altabaa1, John Lafferty2

1 Department of Statistics and Data Science, Yale University

2 Department of Statistics and Data Science, Wu Tsai Institute, Institute for Foundations of Data Science, Yale University

Abstract

An evolving area of research in deep learning is the study of architectures and inductive biases that support the learning of relational feature representations. In this paper, we address the challenge of learning representations of hierarchical relations—that is, higher-order relational patterns among groups of objects. We introduce “relational convolutional networks”, a neural architecture equipped with computational mechanisms that capture progressively more complex relational features through the composition of simple modules. A key component of this framework is a novel operation that captures relational patterns in groups of objects by convolving graphlet filters—learnable templates of relational patterns—against subsets of the input. Composing relational convolutions gives rise to a deep architecture that learns representations of higher-order, hierarchical relations. We present the motivation and details of the architecture, together with a set of experiments to demonstrate how relational convolutional networks can provide an effective framework for modeling relational tasks that have hierarchical structure.

Method Overview

Compositionality, the ability to compose modules to build progressively more complex feature maps, is key to the success of deep representation learning. For example, in a feedforward network each layer builds on the one before, and in a CNN each convolution builds a more complex feature map from the output of the previous one. So far, work on relational representation learning has been limited to “flat” first-order architectures. In this work, we propose a compositional framework for learning hierarchical relational representations, which we call “relational convolutional networks.”

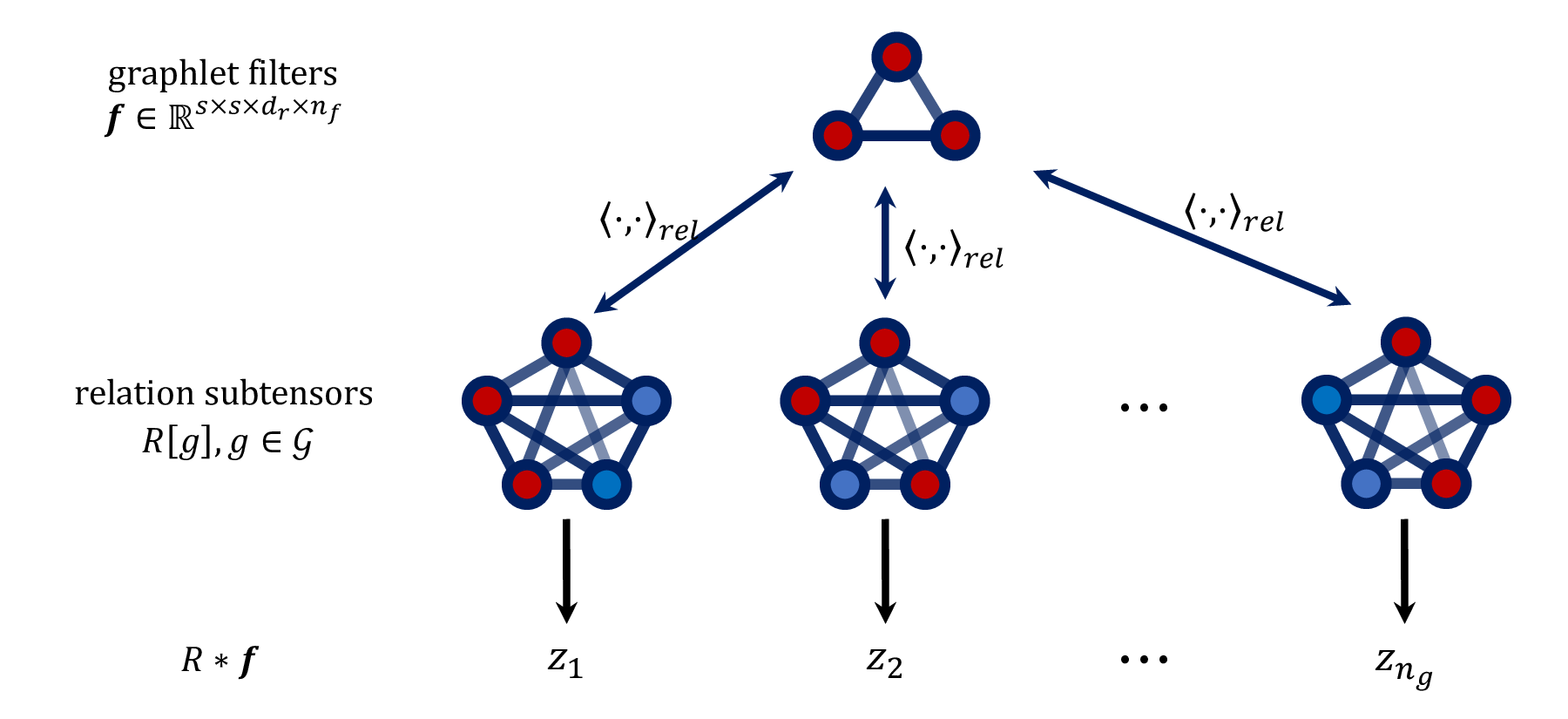

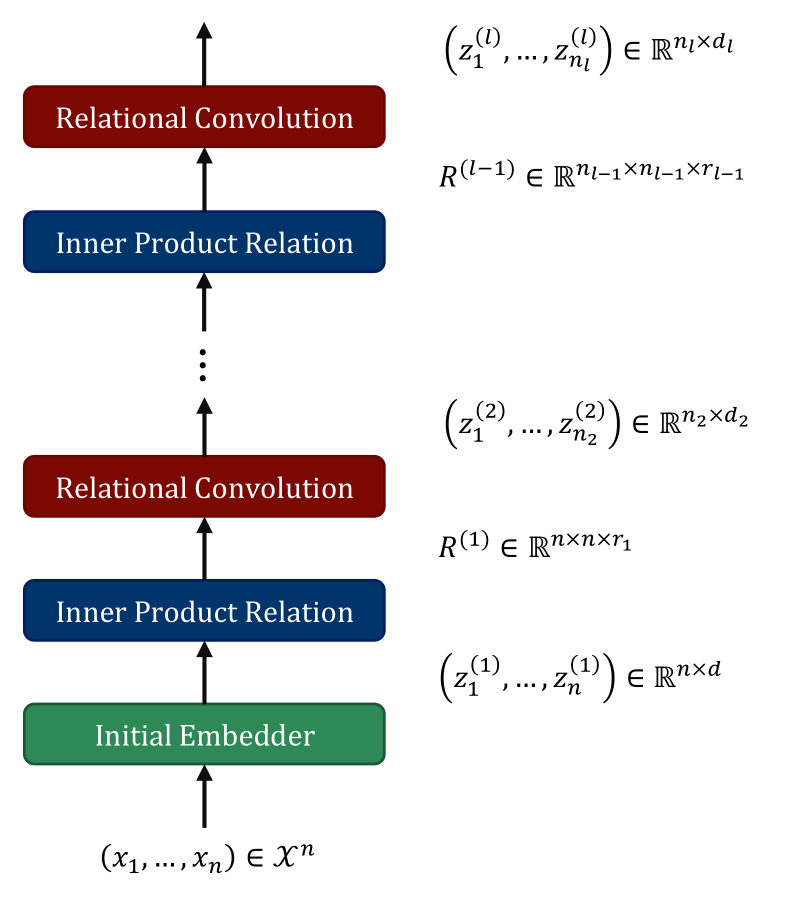

A schematic of the proposed architecture is shown in the figure above. The key idea is to formalize a notion of “convolving” a relation tensor, which describes the pairwise relations in a sequence of objects, with a “graphlet filter,” which represents a template of relations between subsets of objects. Each composition of these operations computes relational features of a higher order.

Multi-Dimensional Inner Product Relation Module. The “Multi-dimensional Inner Product Relation” (MD-IPR) module receives a sequence of objects \(x_1, \ldots, x_m\) as input and models the pairwise relations between them, returning an \(m \times m \times d_r\) relation tensor, \(R[i,j] = r(x_i, x_j)\), describing the relations between each pair of objects.
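To make the shape of the relation tensor concrete, here is a minimal NumPy sketch of an inner-product relation module. It assumes each of the \(d_r\) relation dimensions compares a pair of objects through two linear projections; the names `md_ipr`, `w1`, `w2`, and `d_proj` are illustrative assumptions, not the paper's code, and the actual feature maps may differ (please see the paper).

```python
import numpy as np

def md_ipr(x, w1, w2):
    """Sketch of an inner-product relation module (assumed linear feature maps).

    x:  (m, d) sequence of m objects
    w1: (d_r, d, d_proj) hypothetical "left" projections, one per relation dimension
    w2: (d_r, d, d_proj) hypothetical "right" projections, one per relation dimension
    Returns R with shape (m, m, d_r), where R[i, j] = r(x_i, x_j).
    """
    left = np.einsum("md,kdp->kmp", x, w1)   # (d_r, m, d_proj)
    right = np.einsum("md,kdp->kmp", x, w2)  # (d_r, m, d_proj)
    # R[i, j, k] is the inner product of the k-th projections of x_i and x_j.
    return np.einsum("kip,kjp->ijk", left, right)

rng = np.random.default_rng(0)
m, d, d_proj, d_r = 5, 8, 4, 3
x = rng.normal(size=(m, d))
w1 = rng.normal(size=(d_r, d, d_proj))
w2 = rng.normal(size=(d_r, d, d_proj))

R = md_ipr(x, w1, w2)
print(R.shape)  # (5, 5, 3)
```

In this sketch, sharing the two projections (`w1 is w2`) would make every relation symmetric, while separate projections allow asymmetric relations.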

Relational Convolutions. The relational convolution operation does two things: (1) it extracts features of the relations within groups of objects using pairwise relations, and (2) it transforms the relation tensor back into a sequence of objects, allowing it to be composed with another relational layer to compute higher-order relations. In a relational convolution module, we learn a set of “graphlet filters,” each of which forms a template of relations among a subset of the objects (a graphlet). The output of a relational convolution operation is a sequence of objects \(R \ast \boldsymbol{f} = \left(\langle R[g], \boldsymbol{f} \rangle_{\mathrm{rel}} \right)_{g \in \mathcal{G}} = (z_1, \ldots, z_{n_g})\) where each output object represents the relational pattern within some group of input objects, obtained through an appropriately-defined inner-product comparison with the graphlet filters.
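The following NumPy sketch illustrates one way to read the formula \(R \ast \boldsymbol{f}\). It rests on two assumptions that are not stated on this page: the groups \(\mathcal{G}\) are taken to be all index subsets of a fixed size in sorted order, and the relational inner product is taken to be a sum of entrywise products between the sub-tensor \(R[g]\) and each filter. The function name and the filter layout `(s, s, d_r, n_f)` are hypothetical.

```python
from itertools import combinations

import numpy as np

def relational_convolution(R, filters, group_size):
    """Sketch: compare each group's relation sub-tensor against graphlet filters.

    R:       (m, m, d_r) relation tensor
    filters: (s, s, d_r, n_f) hypothetical layout for n_f graphlet filters of size s
    Returns (groups, Z), where Z has shape (n_g, n_f) and row Z[t] is the
    output object z for group groups[t].
    """
    m = R.shape[0]
    # Assumption: groups are all size-s subsets of the m objects.
    groups = list(combinations(range(m), group_size))
    outputs = []
    for g in groups:
        idx = np.array(g)
        sub = R[np.ix_(idx, idx)]  # (s, s, d_r): relations within the group
        # Assumed relational inner product: sum over all entries of sub * filter.
        outputs.append(np.einsum("ijk,ijkf->f", sub, filters))
    return groups, np.stack(outputs)

rng = np.random.default_rng(0)
m, d_r, s, n_f = 5, 2, 3, 4
R = rng.normal(size=(m, m, d_r))
filters = rng.normal(size=(s, s, d_r, n_f))

groups, Z = relational_convolution(R, filters, group_size=s)
print(len(groups), Z.shape)  # 10 (10, 4)
```

Because `Z` is again a sequence of vectors, it could be fed to another relation module and another relational convolution, which is the sense in which the layers compose.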

Modeling groups. Rather than considering the relational patterns within all groups of objects, we explicitly model and determine relevant groupings through an attention operation.
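As a loose illustration of soft group selection, the sketch below applies softmax attention weights over a set of candidate groups and returns weighted combinations of their features. This is a hypothetical simplification: the `scores` logits, the function names, and the choice to attend over precomputed per-group features are all assumptions, and the mechanism in the paper may differ.

```python
import numpy as np

def softmax(a, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def attend_over_groups(Z, scores):
    """Sketch of soft group selection (an assumption, not the paper's exact method).

    Z:      (n_g, n_f) features for each candidate group
    scores: (n_out, n_g) hypothetical learnable logits, one row per output object
    Returns (n_out, n_f): each output is a convex combination of group features.
    """
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ Z

rng = np.random.default_rng(0)
Z = rng.normal(size=(10, 4))
scores = np.zeros((2, 10))  # uniform attention: every group weighted equally
out = attend_over_groups(Z, scores)
print(out.shape)  # (2, 4)
```

With all-zero logits the output is simply the mean over groups; as one logit in a row grows, that row's output approaches the features of a single group.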

Please see the paper for more details on the proposed architecture.

Experiments

We evaluate our proposed architecture on two sets of relational tasks: relational games and SET. We compare against previously proposed relational architectures, PrediNet and CoRelNet, as well as against Transformers. Please see the paper for a description of the tasks and the experimental setup. We include a preview of the results here.

Relational games. The relational games benchmark allows us to evaluate out-of-distribution generalization. The dataset consists of a series of classification tasks involving a set of objects. In the figure below, we show generalization performance on two sets of objects different from those used during training.

SET. SET is a card game which forms a simple but challenging relational task. To solve the task, one must process the sensory information of individual cards to identify the values of each attribute, then search over combinations of cards and reason about the relations between them. The task therefore tests a model’s ability to represent and reason over relations among subgroups of objects, a capability that is built explicitly into relational convolutional networks but is missing from other relational models.
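For readers unfamiliar with the game, the symbolic rule can be sketched in a few lines of Python: three cards form a set when, for every attribute, the three values are either all the same or all different. The tuple encoding of cards below is an assumption made for illustration; in the experiments the model receives the cards as sensory input rather than as symbolic attributes.

```python
from itertools import combinations

def is_set(a, b, c):
    """Three cards form a set if no attribute takes exactly two distinct values."""
    # 1 distinct value: all the same; 3 distinct values: all different.
    return all(len({x, y, z}) != 2 for x, y, z in zip(a, b, c))

def find_sets(cards):
    """Brute-force search over all triples of cards."""
    return [trio for trio in combinations(cards, 3) if is_set(*trio)]

# Hypothetical encoding: (number, color, shading, shape), each attribute in {0, 1, 2}.
cards = [
    (0, 0, 0, 0),
    (1, 1, 1, 1),
    (2, 2, 2, 2),
    (0, 1, 2, 0),
    (0, 0, 1, 1),
]

print(len(find_sets(cards)))  # 1
```

The search runs over subgroups of cards and the check depends only on relations (same or different) between attribute values, which is the structure the task is meant to probe.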

Finally, we explore the geometry of the learned representations in relational convolutional networks. As a preview, the figure below depicts the geometry of the relational convolution operation, demonstrating its ability to learn compositional features.

Experiment Logs

Detailed experimental logs are publicly available. They include training and validation metrics tracked during training, test metrics after training, code/git state, resource utilization, etc. Experimental logs for all experiments in the paper can be accessed through the following web portal.

Citation

@misc{altabaa2024learninghierarchicalrelationalrepresentations,
  title={Learning Hierarchical Relational Representations through Relational Convolutions},
  author={Awni Altabaa and John Lafferty},
  year={2024},
  eprint={2310.03240},
  archivePrefix={arXiv},
  primaryClass={cs.LG},
  url={https://arxiv.org/abs/2310.03240},
}